Configure SD AUTOMATIC1111 webui-user.bat file for optimal performance and avoid insufficient memory and black/green image errors with Nvidia GeForce GTX1660 and GXT1650 and related graphic cards series.

Automatic1111 optimizations for GTX 1660 6GB VRAM

Use the outlined settings here to achieve the best possible performance in your GeForce GTX 1660 6GB video card with Stable Diffusion. All settings shown here have been 100% tested with my Gainward GHOST GeForce GTX 1660 Super video card and 16GB DDR4 RAM.

Use Nvidia driver version 531

For unknown reasons, latest Nvidia drivers introduced a noticeably slowdown in SD image generation with pytorch. The recommended version that works as fast as possible is 531. You can download this version here:

⬇ GeForce Game Ready Driver 531.68 WHQL (853.94 MB)

Automatic1111 webui parameters

To avoid memory issues and black/empty images on these 16XX cards which feature an incompatible FP16 implementation, configure your webui-user.bat file as follows:

set PYTORCH_CUDA_ALLOC_CONF=max_split_size_mb:512 set COMMANDLINE_ARGS=--xformers --no-half --opt-sdp-attention --medvram

With those parameters you can generate a 512×512 image in about 15 seconds using the DPM++ 2M Karras model.

Total progress: 100%|██████████████████████| 25/25 [00:16<00:00, 1.71it/s] Time taken: 15.81s Torch active/reserved: 4010/5244 MiB, Sys VRAM: 6144/6144 MiB (100.0%)

Running stable diffusion on this card without the --no-half parameter results in this error: RuntimeError: Input type (float) and bias type (struct c10::Half) should be the same.

SD webui command line parameters explained

--xformersThe Xformers library provides a method to accelerate image generation. This enhancement is exclusively available for NVIDIA GPUs, optimizing image generation and reducing VRAM usage. If you are using a Pascal, Turing, Ampere, Lovelace, or Hopper card with Python 3.10, you should include this flag.--no-halfDisables the use of half-precision floating-point numbers (16-bit) in computations. Half precision is a lower precision format that uses less memory and can lead to faster computations but may sacrifice some accuracy compared to full precision.--no-half-vaeDisables the use of half precision in the Variational Autoencoder (VAE) component of the stable diffusion tool. Disabling half precision in VAE may affect the performance and quality of the generated images.--opt-sdp-attentionEnable scaled dot product cross-attention layer optimization; requires PyTorch 2.*--medvramBy default, the SD model is loaded entirely into VRAM, which can cause memory issues on systems with limited VRAM. The--medvramoption addresses this issue by partitioning the VRAM into three parts, with one part allocated for the model and the other two parts for intermediate computation. This allows the model to run more efficiently on systems with limited VRAM and helps to avoid out-of-memory errors.max_split_size_mb:512This may or may not help in upscaling processes. Personally, the usefulness of this option with this video card is dubious to say the least.

Fix no module ‘xformers’ error

If your webui shows this message on startup: No module ‘xformers’. Proceeding without it, add this parameter to your webui-user.bat file for the first run only and then remove it:

--reinstall-xformers

After SD webui is initialized, remove this parameter and replace it with --xformers as usual. The warning about missing xformers module will be gone.

Save VRAM in Stable Diffusion AUTOMATIC1111 webui

✅ Limit to 512×512 resolution

Depending on your prompts, checkpoint models, LoRAs, CFG scale and denoising values, SD can produce either great looking images or indescribable abominations. To save time and resources, when you are testing prompts, comparing models and LoRAs, limit your images size to 512×512 px. This is because creating larger images takes much more time, which could potentially produce useless images anyway resulting in wasted effort. My advice is to generate 512×512 images for testing and fine-tuning purposes without exception.

After choosing the best checkpoint and polishing your LoRAs and prompt, you could create a batch of 512×512 images with CFG and denoising variations using the X/Y/Z plot script, and then cherry-pick the ones for enlarging with the Ultimate SD Upscale extension.

✅ Limit batch size to 1

Batch size is how many images your graphics card will generate at the same time, which is limited by its VRAM. Batch count is how many times to repeat those. Batches have consecutive seeds, more on seeds below.

Increasing the batch size will increase VRAM usage as more data needs to be stored during computations. This can lead to a higher memory footprint, potentially pushing VRAM limits and causing out-of-memory errors. However, a larger batch size can also improve training speed if it remains within VRAM capacity. Finding the right balance between batch size and available VRAM is crucial for efficient training

Reclaim more VRAM for Stable Diffusion

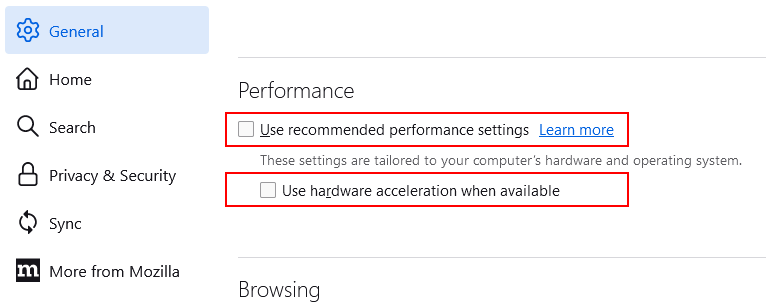

In your browser’s Settings page, make sure to disable hardware acceleration. This means the browser will not use your graphics card VRAM to render pages. In general, this has a negligible effect, as long as your CPU is decent enough to handle smooth video playback et cetera.

Save VRAM by disabling browser’s hardware acceleration

Suggestion

Don’t forget to try ComfyUI

After a lot of frustration with the poorly implemented memory management from automatic1111, I’ve moved to ComfyUI which has none of the memory issues that plagued auto1111. Additionally, you can add all loras you need without worring about running out of memory. You don’t even need to waste time with command line parameters, Comfy will detect your card and apply the best possible settings every time it starts.

ComfyUI’s interface may not be as user-friendly for certain tasks, however, it will help you understand the complete process involved in image generation. You should read the migration guide for automatic1111 users to get started.

Disclaimer

The content in this post is for general information purposes only. The information is provided by the author and/or external sources and while we endeavour to keep the information up to date and correct, we make no representations or warranties of any kind, express or implied, about the completeness, accuracy, reliability, suitability or availability with respect to the website or the information, products, services, or related graphics contained on the post for any purpose. Some of the content and images used in this post may be copyrighted by their respective owners. The use of such materials is intended to be for educational and informational purposes only, and is not intended to infringe on the copyrights of any individuals or entities. If you believe that any content or images used here violate your copyright, please contact us and we will take appropriate measures to remove or attribute the material in question.